How do you actually use DORA Metrics?

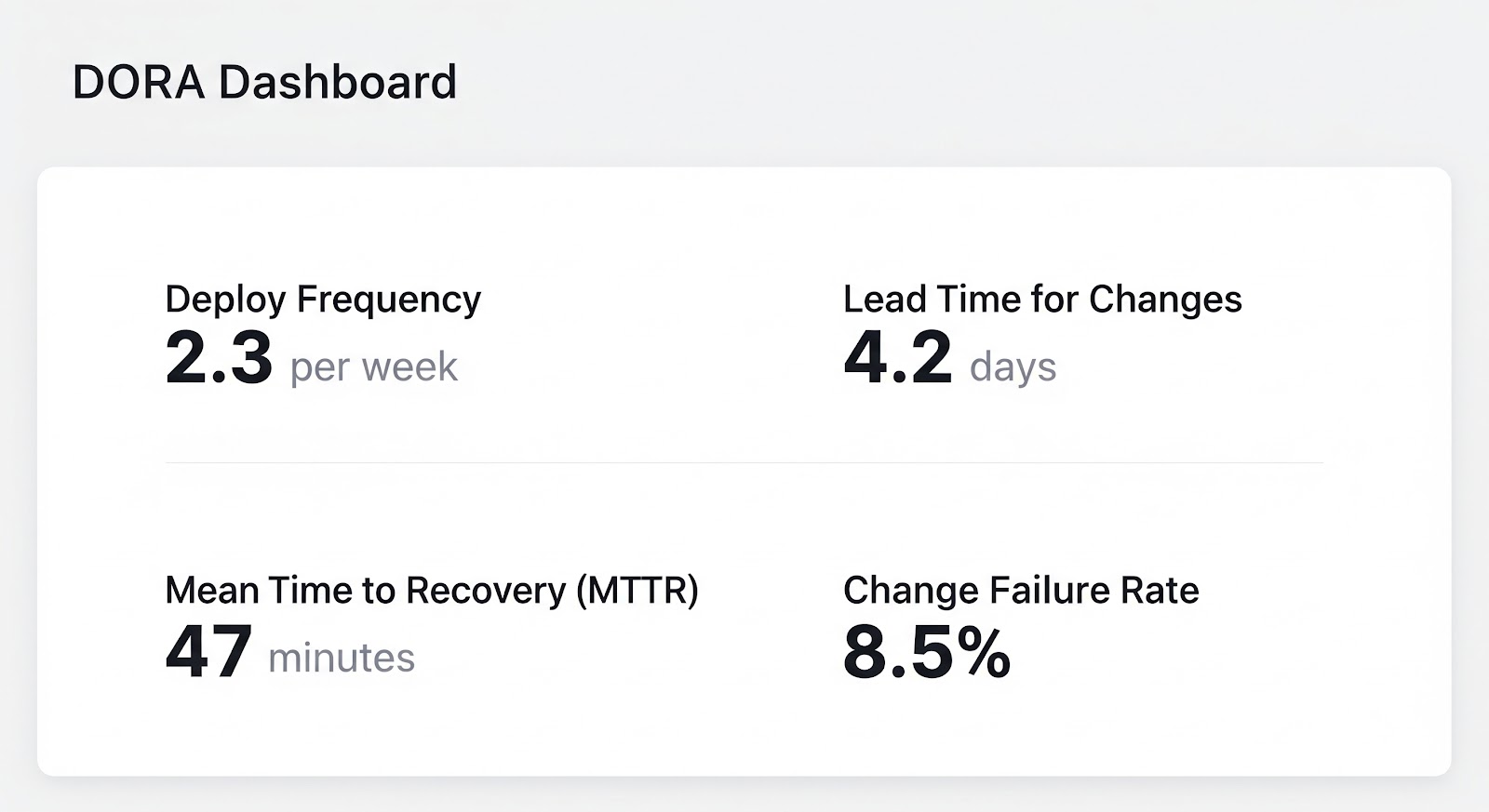

It's 3 PM on a Tuesday, and you're staring at your beautifully designed DORA metrics dashboard.

The numbers seem accurate, but you're left wondering…what do I actually do with this information?

If this sounds familiar, you're not alone.

You've built or purchased a product, spent days, weeks, or months setting up integrations, fixing data issues, and working with users. Now you need to understand how to actually get value from this data.

Here's how to turn those numbers into meaningful improvements and set realistic goals that make a real difference.

Understanding your data and its limitations

First things first: you need to understand what you're actually measuring. Remember the old adage: "Garbage in, garbage out." You need accurate and consistent data to draw good conclusions, which means taking time to validate your data sources and understand their limitations.
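As a rough illustration of what validating a data source can look like, here is a minimal sketch that scans deployment records for a few common problems. The record shape (`id`, `committed_at`, `deployed_at`) and the function name `find_data_issues` are assumptions made for this example, not the schema of any particular DORA tool; adapt the checks to whatever your pipeline actually exports.

```python
from datetime import datetime

def find_data_issues(deployments):
    """Return (deployment id, problem) pairs for records that look suspicious.

    The field names used here are hypothetical; map them to your own export.
    """
    issues = []
    seen_ids = set()
    for d in deployments:
        dep_id = d.get("id")
        # The same deployment reported twice inflates deployment frequency.
        if dep_id in seen_ids:
            issues.append((dep_id, "duplicate record"))
        seen_ids.add(dep_id)

        committed, deployed = d.get("committed_at"), d.get("deployed_at")
        if committed is None or deployed is None:
            # Lead time cannot be computed without both timestamps.
            issues.append((dep_id, "missing timestamp"))
        elif deployed < committed:
            # A negative lead time usually points to a clock or mapping problem.
            issues.append((dep_id, "deployed before commit"))
    return issues

# Made-up sample data for illustration only.
sample = [
    {"id": "a1", "committed_at": datetime(2024, 3, 4, 9), "deployed_at": datetime(2024, 3, 4, 15)},
    {"id": "a1", "committed_at": datetime(2024, 3, 4, 9), "deployed_at": datetime(2024, 3, 4, 15)},
    {"id": "b2", "committed_at": datetime(2024, 3, 5, 12), "deployed_at": datetime(2024, 3, 5, 10)},
    {"id": "c3", "committed_at": None, "deployed_at": datetime(2024, 3, 6, 10)},
]

issues = find_data_issues(sample)
for dep_id, problem in issues:
    print(dep_id, problem)
```

Even a short list of flagged records tells you how much weight the dashboard numbers can bear before you start drawing conclusions from them.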

When examining your metrics, focus on trends over time rather than isolated snapshots. Establish your baseline by understanding where you were, where you are now, and where you're heading. This view over time gives you the context you need to make smart decisions about what to improve.
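One way to look at a trend rather than a snapshot is to bucket deployments by week and smooth the counts with a trailing average. The sketch below assumes you can export a plain list of deployment dates; the helper names `weekly_counts` and `rolling_average` and the sample dates are invented for illustration.

```python
from collections import Counter
from datetime import date

def weekly_counts(deploy_dates):
    """Count deployments per ISO (year, week), in chronological order.

    Weeks with zero deployments do not appear in the result.
    """
    counts = Counter(d.isocalendar()[:2] for d in deploy_dates)
    return sorted(counts.items())

def rolling_average(values, window=4):
    """Trailing mean over up to `window` values, one result per input value."""
    averages = []
    for i in range(len(values)):
        chunk = values[max(0, i - window + 1): i + 1]
        averages.append(sum(chunk) / len(chunk))
    return averages

# Made-up deployment dates covering four consecutive weeks.
deploy_dates = [
    date(2024, 1, 2), date(2024, 1, 4),
    date(2024, 1, 9),
    date(2024, 1, 15), date(2024, 1, 17), date(2024, 1, 18),
    date(2024, 1, 22), date(2024, 1, 23), date(2024, 1, 24), date(2024, 1, 26),
]

per_week = weekly_counts(deploy_dates)
trend = rolling_average([count for _, count in per_week], window=2)

for (week, count), avg in zip(per_week, trend):
    print(week, count, avg)
```

The smoothed series is what you would compare against your own baseline; a single low week, like the second one in this sample, matters much less than the direction of the line.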

However, don't fall into the trap of focusing solely on raw numbers. DORA metrics are inherently relative to your organization, team structure, processes, and the software being built. They're not meant to be compared against industry benchmarks without considering your unique context. For example, a team maintaining a legacy mainframe system might deploy weekly, and for them that could be excellent progress, while a team working on a modern microservice might deploy multiple times per day. A 4-hour lead time for a team that requires extensive compliance review processes might be outstanding, while the same metric for a team with automated testing could indicate serious bottlenecks.

Similarly, your change failure rate might look concerning at 15%, but if your team is experimenting with new deployment techniques or working on a particularly complex migration, that context completely changes how you should interpret the number.

The key point here is that DORA metrics alone cannot pinpoint the root cause of issues. While they might highlight potential areas for investigation, they're indicators, not explanations. You'll need to dig deeper to understand whether an apparent problem represents an actual area for improvement, indicates a data quality issue, or simply reflects the reality of working with a particular team or technology stack.

Setting meaningful goals

Effective DORA metrics implementation requires setting achievable improvement goals based on your current metrics and organizational context. Don't chase arbitrary targets. Focus on metrics that matter to your business and make steady progress instead of trying to change everything at once.

Instead of saying "improve deployment frequency by 50%," try "reduce the manual steps in our deployment process from 8 to 4 over the next quarter." Instead of "cut lead time in half," consider "eliminate the 2-day code review bottleneck by implementing pair programming for critical changes."

The key is to avoid chasing numbers for their own sake. Instead, focus on understanding what the metrics mean for your team and your business outcomes. The goal isn't to artificially inflate your DORA metrics by incentivizing the wrong behaviors. The spirit of DORA research is to understand where problems exist, attempt to fix them systematically, and then observe whether the metrics reflect those improvements.

Implementing changes and managing expectations

When developing strategies for improvement, think about the underlying outcomes that matter behind each DORA metric. Rather than saying "we need to improve our Deployment Frequency," consider how you might make it easier for developers to deliver value quickly. Where are the bottlenecks? Where do developers experience pain? Where are teams waiting, and what is ultimately realistic given the context of that team and their work?

Here's a practical approach: Pick one metric that's clearly problematic. Ask your team: "What's the most frustrating part of getting code to production?" Then map that frustration to your DORA metric. If developers say "waiting for approvals," that's your lead time problem. If they say "deployments break things," that's your change failure rate. Start there.

Focusing on process gives you improvements that actually stick. If you make a change to solve a problem and subsequently see your DORA metrics improve, that's validation that your intervention was successful.

However, if the DORA metrics don't improve after a meaningful process change, that doesn't necessarily mean the change was ineffective.

Given the imperfect nature of automated DORA metrics collection, not every meaningful change will be perfectly reflected in the data. This is part of why the DORA research program at Google relies heavily on surveys rather than on automated metrics alone: what teams say and feel about their processes often captures improvements that quantitative metrics miss.
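The before-and-after check described above can be sketched as a comparison of median lead time on either side of the date a process change went live. The record fields, the function names, and the sample numbers are all assumptions for illustration. As the preceding paragraphs note, a flat result does not prove the change failed.

```python
from datetime import datetime
from statistics import median

def median_lead_time_hours(records):
    """Median commit-to-deploy time in hours, or None if there are no records."""
    hours = [
        (r["deployed_at"] - r["committed_at"]).total_seconds() / 3600
        for r in records
    ]
    return median(hours) if hours else None

def compare_around(records, change_date):
    """Split records at `change_date` and return (before, after) medians."""
    before = [r for r in records if r["deployed_at"] < change_date]
    after = [r for r in records if r["deployed_at"] >= change_date]
    return median_lead_time_hours(before), median_lead_time_hours(after)

# Made-up records: three deployments before the change, three after.
records = [
    {"committed_at": datetime(2024, 3, 4, 9), "deployed_at": datetime(2024, 3, 6, 9)},
    {"committed_at": datetime(2024, 3, 5, 10), "deployed_at": datetime(2024, 3, 6, 16)},
    {"committed_at": datetime(2024, 3, 6, 8), "deployed_at": datetime(2024, 3, 8, 12)},
    {"committed_at": datetime(2024, 3, 12, 9), "deployed_at": datetime(2024, 3, 13, 5)},
    {"committed_at": datetime(2024, 3, 13, 10), "deployed_at": datetime(2024, 3, 14, 12)},
    {"committed_at": datetime(2024, 3, 14, 9), "deployed_at": datetime(2024, 3, 14, 21)},
]

# Hypothetical date on which the process change took effect.
before, after = compare_around(records, datetime(2024, 3, 11))
print(f"median lead time before: {before} h, after: {after} h")
```

The median is used instead of the mean so that one unusually slow deployment does not dominate the comparison. With only a handful of data points on each side, treat the result as a prompt for a conversation with the team, not as proof.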

Here's the thing: DORA is a guide, not a silver bullet. There's no one-size-fits-all solution, so you'll need to manage expectations appropriately. A single product or intervention won't magically solve all your problems. When setting expectations internally or evaluating vendor claims, use the DORA research to stay focused on what actually matters instead of getting distracted by flashy promises.

Putting it all together: your DORA action plan

To actually improve your organization, you'll need to incorporate DORA into a broader approach to improving how you work. This means understanding your data and its limitations, setting meaningful goals tied to outcomes rather than metrics, and ensuring those goals and expectations align with both the intent and reality of DORA research.

Focus your implementation efforts on process improvement rather than metric optimization. Monitor your metrics continuously, but remember that the real value comes from learning and adapting along the way. The metrics themselves are simply a tool to help you understand whether your process improvements are having the intended effect.

This week, try this: Spend 30 minutes with your team asking "What slows us down?" Write down the top 3 answers. Then look at your DORA metrics and see which one might improve if you solved just one of those problems. Start with the easiest fix. The goal isn't perfect metrics; it's a team that can deliver value faster and more reliably.

Success with DORA metrics isn't about achieving perfect scores or matching industry benchmarks. It's about building a culture of continuous improvement where teams use data to identify problems, implement solutions, and measure the effectiveness of their efforts. When approached with this mindset, DORA metrics become a powerful tool for driving meaningful organizational change rather than just another dashboard to monitor.
